Introduction

This article provides an introduction to the use of natural language for accessing analytics data through generative AI models. Today, most analytics are provided through purpose-built applications and dashboards, or by trained data analysts and data science teams. Applications and dashboards are expensive to build and maintain and not everyone has access to a data analytics team.

We define the democratization of analytics as follows:

The democratization of analytics occurs when users have access to data analytics for their business domains through non-technical interfaces such as natural language based queries.

We’ll discuss how the application of generative AI to structured and unstructured data will power the democratization of analytics. Today, access to analytics is limited both by resource-constrained data teams and by how well users and organizations can predict their analytics requirements in advance. The consequence is that individuals and organizations operate with reduced information to support their decisions.

Readers don’t need a background in AI to get value out of this article. To support the widest possible audience, a glossary at the end of the article defines the terminology used in this discussion.

The Importance of Retrieval-Augmented Generation (RAG)

The Large Language Models (LLMs) that underpin generative AI models such as GPT and Gemini are not trained on your organization’s data and may not be trained on the terminology and calculations of your business domain. This makes it difficult, if not impossible, for these models to answer specific analytics questions without help. We suggest here that retrieval-augmented generation (RAG) is the best mechanism available today to provide that help.

RAG is an important approach that allows generative AI models to act on data not available during the training phase of the model. With RAG, an application component intercepts a user query, determines the structured and/or unstructured data required, retrieves the data needed, and reformulates the prompt with the extra data and instructions required for generating an answer to the user query.

Here is a quick example of RAG using a chat-based interface. We can ask Gemini or ChatGPT about the visitors for a restaurant we are analyzing:

“How many visitors did Chet’s Diner - 1034 Maple Ave have in January 2024?”

However, neither of these generative AI platforms was trained on data about venue visitations. We’ll get a response such as, “As an AI language model, I don’t have access to real time data or databases.” We can work around this by recognizing this limitation and providing the data the AI model needs to answer the question. The following table shows a few rows of the data required as a sample. To fully support the response, we will use Unacast data to provide at least the daily visitors for all of January 2024, and perhaps more based on the scope of the user query.

We supply the structured data illustrated above in a prompt to the generative AI model. We can also rephrase the original user query to give the AI model further guidance, for example:

“You are a retail analysis expert. Provide the metric on the number of monthly visitors in January 2024 to Chet's Diner - 1034 Maple Ave using the following data: <insert the records here>. Describe how you actually calculated the number of monthly visitors.”

We manually performed the following steps:

- We intercepted the original user query: “How many visitors…”

- We identified the data that the generative AI model would need to answer this inquiry and we retrieved that data.

- We modified the prompt to rephrase the query to use the supplied data, and added the data along with additional instructions.

- We submitted the prompt to ChatGPT as the modified user query and received an answer.

This is relatively straightforward and something any user can do with Gemini or ChatGPT. However, we can’t expect the analytics user to perform all of the above steps. If we do, we are not going to reach our goal of democratizing access to analytics data. The applications that we build, such as copilots that support natural language access to analytics and data, will provide these services on behalf of the user.
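The manual steps above can be sketched in a few lines of Python. This is a minimal illustration, not Una’s implementation: the `DailyVisits` type, the `retrieve` and `build_prompt` helpers, and the visitor counts are all hypothetical placeholders. The sketch covers retrieval and augmentation only; the resulting prompt would then be submitted to a generative AI platform.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class DailyVisits:
    venue: str
    day: date
    visitors: int


# Hypothetical placeholder rows; real values would come from a visitation dataset.
SAMPLE_ROWS = [
    DailyVisits("Chet's Diner - 1034 Maple Ave", date(2024, 1, 1), 100),
    DailyVisits("Chet's Diner - 1034 Maple Ave", date(2024, 1, 2), 120),
    DailyVisits("Other Venue", date(2024, 1, 1), 50),
    DailyVisits("Chet's Diner - 1034 Maple Ave", date(2024, 2, 1), 90),
]


def retrieve(rows, venue, year, month):
    """Retrieval: keep only the rows the model needs for this question."""
    return [
        r for r in rows
        if r.venue == venue and r.day.year == year and r.day.month == month
    ]


def build_prompt(venue, year, month, rows):
    """Augmentation: rephrase the query and attach the retrieved records."""
    records = "\n".join(f"{r.day.isoformat()},{r.visitors}" for r in rows)
    return (
        "You are a retail analysis expert. "
        f"Provide the number of monthly visitors in {year}-{month:02d} to {venue} "
        "using the following data (date,visitors):\n"
        f"{records}\n"
        "Describe how you calculated the number of monthly visitors."
    )


venue = "Chet's Diner - 1034 Maple Ave"
january_rows = retrieve(SAMPLE_ROWS, venue, 2024, 1)
prompt = build_prompt(venue, 2024, 1, january_rows)
print(prompt)
```

An application that performs these steps on the user’s behalf lets the user type only the original question.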

The Current State of Generative AI in Analytics

Many companies, such as Google, Snowflake, Microsoft Azure, OpenAI, LlamaIndex, LangChain, Haystack and more, are working to bring to market the vision of natural language access to data through generative AI and related technologies such as vector embeddings. The first three are not surprising: a majority of analytics is based on structured data, and Google BigQuery, Snowflake, and Azure SQL contain a very large amount of structured data and, increasingly, unstructured data. Yet we shouldn’t count out the smaller players and new entrants into the space. These organizations support very useful frameworks and tools for building generative AI with RAG, and many of them work with multiple LLMs.

The key components bringing the vision of the democratization of analytics to fruition are:

- Text understanding & translation: natural language processing (NLP) through large language models (LLMs)

- Retrieval-augmented generation (RAG): access to structured and unstructured data not available in the pre-trained foundational LLMs

- Metadata: a critical element in terms of training, verifying, and maintaining AI-based applications over time

Chat-like copilots and search bars are often the user interfaces to generative AI models, and similar UIs are currently being deployed inside of analytics applications. In this article, we’ll provide examples from Unacast’s application-embedded analytics copilot, which is supported by generative AI and RAG.

One last note before we move on. Generative AI can be used for many purposes, but this article is focused on its use in analytics. We will use both the terms “analytics copilot” and “AI-based analytics application.” AI-based analytics application is the broader term and includes analytics copilot applications.

We will also use the terms generative AI model and generative AI platform. Generative AI models refer to the underlying technology. A generative AI platform is a vendor-hosted generative AI model providing services to other organizations. Examples of these platforms include ChatGPT, Google Gemini, and Snowflake Cortex.

The Democratization of Analytics

The democratization of analytics through natural language is a heady topic. We’ve discussed the basics in the introduction, so let’s look at an example to make things more concrete.

Unacast’s location insights product provides retail and real estate analytics for customers. The user interface (UI) has been carefully designed to bring multiple types of information about venues and custom locations together on behalf of the user.

The following screen illustrates the Unacast Insights application and data on a specific venue. The UI in this example provides an overview of the brand, visualization of the location, an overview of foot traffic trends, and the top customer profiles for this venue.

From the UI, we can easily see that foot traffic to this Domino’s Pizza store is down in January 2024. That’s interesting information for a retail analyst, but we’ll move on to the examples of the analytics copilot.

Introducing Una — An Analytics Copilot

Una is the analytics copilot for the Unacast Insights product. Una does a great job of showcasing natural language access to structured and unstructured data. Una uses RAG and generative AI services to provide analytics using Unacast’s visitation, venue, and neighborhood data. Keep in mind that Una is brand new and still being trained. Una’s capabilities aren’t fully complete yet, but they are continuing to grow.

We can ask Una for more information about foot traffic to the Domino’s Pizza venue highlighted above.

The Mechanics of an Analytics Copilot

Let’s take a look at what happens when the user query is submitted in the example above. Here is a brief summary:

- The context is collected based on the application state.

- The copilot understands the initial context as being the Domino’s Pizza we are currently analyzing. We can test this by asking the copilot about its current venue context.

- To obtain the requested data on foot traffic, the natural language text of the user query is processed in a series of steps that yields the response. In RAG, the steps are classified as retrieval, augmentation, or generation.

Next, we need to obtain the data needed for the response that the generative AI platform doesn’t have:

- The user query (e.g. venue, foot traffic, specific venue) is mapped to an index of the documents relevant to the request. This is usually performed using a vector search operation, but there are other ways of performing this step. Documents here refer to both structured and unstructured data.

- The documents are retrieved.

Next are the augmentation and generation phases:

- The data from the retrieved documents is added to a prompt using a prompt template. The prompt will also include useful instructions to the LLM, along with context information (e.g. the current venue is Domino’s Pizza, 4012 Chicago Avenue).

- The completed prompt is submitted to the generative AI platform through its API.

- The AI model generates the response. The retrieved documents increase the amount of data available to the generative AI for answering the user query.

- The generative AI platform returns the response via the API.

The first step is augmentation, and the rest of the steps fulfill the generation.
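The retrieval, augmentation, and generation steps described above can be sketched as a small pipeline. This is a hedged illustration under several assumptions: the document texts are placeholders, retrieval is done by simple word overlap rather than the vector search a real copilot would more likely use, and `generate` is a caller-supplied function standing in for the call to a generative AI platform’s API.

```python
import re

# Hypothetical documents standing in for structured and unstructured data.
DOCUMENTS = {
    "foot_traffic": "Foot traffic: monthly visit counts per venue (placeholder text).",
    "customer_profiles": "Top customer profiles: share of visitors per profile (placeholder text).",
    "product_docs": "Product documentation describing the available data products (placeholder text).",
}

# Prompt template with slots for context, retrieved data, and the user query.
PROMPT_TEMPLATE = (
    "Context: the current venue is {venue}.\n"
    "Use only the data below to answer.\n"
    "Data:\n{data}\n"
    "Question: {query}"
)


def tokens(text):
    """Lowercase word set used for the toy overlap ranking."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Retrieval: rank documents by the number of words shared with the query."""
    q = tokens(query)
    ranked = sorted(
        documents,
        key=lambda name: len(q & tokens(documents[name])),
        reverse=True,
    )
    return [documents[name] for name in ranked[:top_k]]


def answer(query, venue, documents, generate):
    docs = retrieve(query, documents)                # retrieval
    prompt = PROMPT_TEMPLATE.format(                 # augmentation
        venue=venue, data="\n".join(docs), query=query
    )
    return generate(prompt)                          # generation


# Echo the prompt instead of calling a real model, to show what would be sent.
result = answer(
    "What is the foot traffic for this venue?",
    "Domino's Pizza, 4012 Chicago Avenue",
    DOCUMENTS,
    generate=lambda prompt: prompt,
)
print(result)
```

Passing `generate` in as a parameter keeps the sketch independent of any particular platform; a real application would supply a function that calls its chosen API.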

Let’s continue to explore and see what else we can find out through the copilot. For example, what else can we learn about the visitors to this venue?

The analytics copilot can access other information, such as the Top Customer Profiles for the venue or the most popular days by visit count. In the example below, RAG brings together three kinds of data to support generation of the response by the AI model: terminology of the business domain (e.g. Top Customer Profile), structured data (38.34% of visitors for the Top Customer Profile), and unstructured data (the socio-demographic definition of Near-Urban Diverse families).

In part 2 of this article, we’ll dive deeper into different ways RAG can be achieved along with the pros and cons of each.

More Examples With Una

We’ve been asking Una about the foot traffic to a venue through the visitation and related data we generate for venues, brands, and custom locations. In this example, as a new user, we are interested in information on the data products that Unacast provides. For the following user query, RAG augments the prompt with unstructured data, namely the Unacast product documentation.

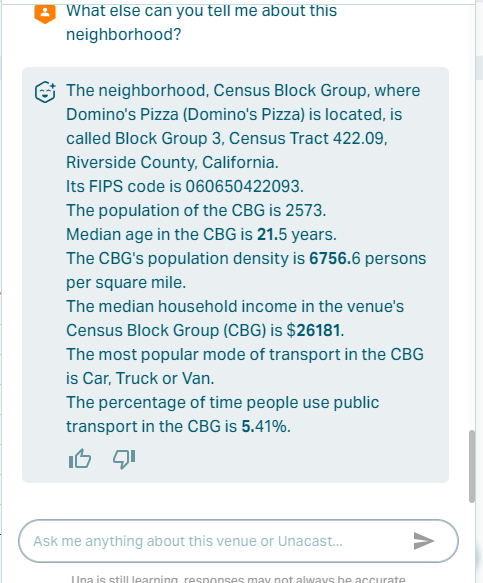

Now we’ll ask Una about neighborhoods, as Unacast also provides data about visitation patterns to broader areas. Unacast neighborhoods are based on Census Block Groups (CBG). Una provides its response from data on the CBG that this Domino’s store is located in. The analytics copilot is able to access the relationship between the current venue and the CBG that contains the venue to provide information about the CBG.

We can also ask a quick question about this neighborhood’s foot traffic.

Let’s move on to trade areas. Trade areas provide information on where visitors come from and are relevant for retail analytics, real estate analysis, and other use cases. Here we ask a basic trade area question:

Additional valuable analytics include comparisons of a particular venue to the brand as well as to the overall market that the brand participates in. The below example is for a Family Dollar store.

In case you are interested, Una uses Google’s Gemini for its generative AI platform component.

Review: Why We Need RAG

The examples above illustrate why we need RAG and not just generative AI. A generative AI platform will not contain our proprietary data on foot traffic to venues and neighborhoods. Even if it did, given the costs of training LLMs, the LLM is not likely to have the latest metrics. Here are a few examples showing why RAG is needed.

Here’s Gemini’s answer to a foot traffic query for this venue:

Likewise, it’s unrealistic for LLMs to be trained on data relevant to every business domain.

Here is Gemini’s answer to the CBG that contains the Domino’s Pizza venue:

This is the nature of LLMs and the training data they have access to. Here is ChatGPT’s answer to the neighborhood for the Domino’s Pizza in question:

Fortunately, we have RAG techniques to provide the additional data we need for a generative AI platform to answer our questions. The LLM underlying the generative AI platform beautifully does what it is intended to do — understanding human language and incorporating a wide base of information. With RAG, a generative AI platform accepts new data through prompts and provides the understanding of semantics and context needed to interpret the query and generate the response. RAG delivers the private, proprietary, or up-to-date data required.

User Experience & User Interface Design with Natural Language

Natural language access to analytics and data enables new user interface (UI) design. Appropriately deployed, these new approaches to UIs can significantly improve the user experience. For example, using an analytics copilot, we can obtain answers to questions relevant to our research, but on different topics, without leaving the current page. We save time in navigating back and forth in the UI by using the copilot. This also includes time spent discovering where needed metrics are located within the application. The copilot can return links to relevant application pages that contain a broader presentation of analytics data for a specific topic.

Natural language access to analytics data will support significant changes to the UIs of applications. There is a growing trend towards bringing a user’s requested information to the current page so that there is no need to navigate away. We see examples of this already in search results, where the search engine summarizes the contents of pages in the result. This information can reduce navigation since the user can decide whether a link is useful without navigating away from the current page. Here’s an example from Google Search showing the embedded summarization of data within the results page:

The UIs for ChatGPT, Gemini, and other generative AI platforms can be considered single panes that bring information to the user without the user having to navigate to the data. Most users utilize ChatGPT or Gemini to access many different topics without having to follow links in a search result or navigate to a separate application for the information desired. The efficiency provided by the single pane approach is significant to both our personal and business lives.

It’s not a large leap from an analytics copilot chatbot that is one component of a page to a natural language input field integrated directly into the main page of the application. Future applications will morph the page to display information for the user’s query with summary and detail data, charts, and other visualizations. Menu-based navigation will continue to exist for the user who knows exactly what they want and where to get it, and for the times the application gets it wrong.

Summary

We introduced the democratization of analytics via natural language access powered by Generative AI and RAG. Using Una, Unacast’s analytics copilot and several examples, we explored the utility and power of natural language access to analytics. Along the way we briefly touched on the use of RAG within Una and why it’s needed. We also explored the impact of natural language interfaces on application design.

Through simple examples, we showed that the basic RAG flow is straightforward. However, to fully meet user analytics needs in different domains, there are some additional considerations. In part 2 of this article, we’ll dive deeper into generative AI with RAG and options for implementation. If you are considering building your own AI-based analytics application, part 2 contains useful background and suggestions.

If you are interested in building an analytics application using location intelligence data, you can check out our available datasets at www.unacast.com.

Glossary

There are several natural language processing and artificial intelligence terms used seemingly interchangeably at times. We acknowledge being guilty of this. This glossary is provided with the intention of defining terminology that relates to the topic of this article.

Natural Language Processing (NLP)

Natural Language Processing, at its core, is the ability for computers to understand language. NLP predates large language models and generative AI, and it has been a computer science topic for more than 50 years. From its beginnings, it has been related to the field of linguistics. NLP is a broad term that covers additional topics such as Semantic Search, Large Language Models, and Generative AI. Language models in general support NLP.

Transformer Model

Transformer models provide significantly enhanced capabilities for capturing semantics and context. Transformer models were defined in the 2017 paper, “Attention Is All You Need,” which is a frequently referenced paper in generative AI.

Large Language Model (LLM)

Today, many Large Language Models (LLMs) are based on the transformer model, but this was not always the case. Before LLMs, NLP was supported by early language models such as Word2Vec and Doc2Vec. Recurrent Neural Networks (RNN) and Long Short-Term Memory (LSTM) models provided additional features for language models prior to transformers, but transformer models are the basis of LLMs such as BERT, Bard, and GPT. Not all LLMs are generative. BERT (Bidirectional Encoder Representations from Transformers) is used for contextualized understanding of text and supports question-and-answer dialogues, but its responses are limited to the data it was trained on; it does not have the capability to generate new responses.

Generative AI

Generative AI models are LLMs, primarily based on the transformer model, that are trained on a large corpus of data and can create new and novel responses based on input to the model. Generative AI models are a subset of LLMs, but they are currently the primary models being deployed. Generative models such as Bard and GPT are considered pre-trained transformer models; GPT stands for Generative Pre-Trained Transformer.

Just as in human learning, the training component is important, though human learning is more continuous than LLMs, at least as of today. If you have asked ChatGPT a question and been reminded that its knowledge is only up to a certain date, this is a symptom of the pre-training limitation. See Retrieval-Augmented Generation for a technique that can assist with pre-training limitations.

Generative AI Platform

A generative AI platform is a generative AI model trained and hosted by a vendor for use by other organizations. Access is typically through application programming interfaces (APIs) and user interfaces (UIs). OpenAI’s ChatGPT, Google’s Gemini (formerly Bard), and Snowflake’s Cortex are examples of generative AI platforms.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) was developed to allow LLMs to operate on more recent data after they have been pre-trained. This is done by augmenting the prompt to the LLM with data it was not trained on. RAG may also be used to verify and constrain LLM question-and-answer output by comparing the output to the retrieved data, helping to reduce hallucinations and incorrect answers.

RAG is an important technique for applying LLMs to analytics data.

Vector Search

Vector-based search (also called semantic search) improves the searching of documents and structured data by semantically mapping a query to a set of indexed documents. Vector search is a major step up from keyword searching and bag-of-words (BoW) techniques. Vector embedding libraries are used to generate vectors, and the vectors are stored in a vector store. Vector search is implemented via one of several distance formulas; vectors nearer to each other in the vector space are more similar in terms of their semantics.
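As a small illustration of the distance idea, the sketch below ranks entries in a toy vector store by cosine similarity, one of the commonly used measures. The three-dimensional vectors and their labels are made-up placeholders; real embeddings are produced by an embedding model and typically have hundreds of dimensions or more.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; higher means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings keyed by the document they represent.
VECTOR_STORE = {
    "foot traffic trends": [0.9, 0.1, 0.0],
    "customer profiles": [0.1, 0.9, 0.1],
    "product documentation": [0.0, 0.2, 0.9],
}


def search(query_vector, store, top_k=1):
    """Return the keys of the top_k stored vectors most similar to the query."""
    ranked = sorted(
        store,
        key=lambda key: cosine_similarity(query_vector, store[key]),
        reverse=True,
    )
    return ranked[:top_k]


# A query vector that points mostly along the first dimension.
print(search([0.8, 0.2, 0.1], VECTOR_STORE))
```

Production systems usually rely on a vector database or an approximate nearest-neighbor index rather than scanning every vector as this sketch does.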

Prompts & Prompt Engineering

Prompts are (typically) text inputs to LLMs. They are the input into a trained model, and they are used in the general training and fine-tuning of models. Prompt engineering involves the creation and use of carefully considered prompts in order to further train and fine-tune a model.

Applications using generative AI are supported by the LLM’s API. For model training, fine-tuning, and verification, ML/AI Ops executes multiple series of prompts via scripts and APIs. Prompts are used to train models in terms of structured data outside of pre-trained LLMs as supported by RAG. Prompts are the usual mechanism by which users interact with generative AI models.

text -> SQL, text -> application

The above patterns are shorthand for approaches that generative AI models make possible for natural language access to data. The pattern text -> SQL is shorthand for generative AI approaches aimed at querying relational databases through SQL. text -> application is shorthand for generative AI approaches for natural language access through applications, where the application could be a REST client, a headless browser, or an API.
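A minimal sketch of the text -> SQL pattern follows, using Python’s built-in `sqlite3` module. Everything specific here is an assumption made for illustration: the `daily_visits` table, its rows, and `fake_text_to_sql`, which returns a fixed query in place of a real model call. A real system would prompt a generative AI model with the schema and the user’s question and would validate the generated SQL far more carefully than the simple check shown.

```python
import sqlite3


def fake_text_to_sql(question):
    """Stand-in for the model call that translates a question into SQL."""
    return (
        "SELECT SUM(visits) FROM daily_visits "
        "WHERE venue = 'Example Venue' "
        "AND day BETWEEN '2024-01-01' AND '2024-01-31'"
    )


def run_query(conn, sql):
    """Execute generated SQL, allowing only read-only SELECT statements."""
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(sql).fetchall()


# In-memory database with hypothetical placeholder rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_visits (venue TEXT, day TEXT, visits INTEGER)")
conn.executemany(
    "INSERT INTO daily_visits VALUES (?, ?, ?)",
    [
        ("Example Venue", "2024-01-01", 100),
        ("Example Venue", "2024-01-02", 120),
        ("Example Venue", "2024-02-01", 90),
    ],
)

sql = fake_text_to_sql("How many visits did Example Venue have in January 2024?")
rows = run_query(conn, sql)
print(rows)
```

The query result would then be passed back to the model, or formatted directly, to produce the natural language answer.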

Semi-additive and non-additive facts/metrics

Certain metrics are not additive across dimensions. For example, we can’t add up the daily visitors to an airport to get an accurate count of the unique monthly visitors to that airport, as some passengers and especially employees will visit the airport on multiple days. We then say that the daily airport visitor metrics are not additive across the time dimension, making this an example of a semi-additive fact. Ratios are a type of metric that is usually considered non-additive.
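The airport example can be made concrete with a few lines of Python. The visitor identifiers below are invented for illustration; the point is only that summing daily unique counts overstates the number of unique visitors for the period whenever someone visits on more than one day.

```python
# Hypothetical visitor IDs observed at an airport on three days.
daily_visitors = {
    "2024-01-01": {"ann", "bob", "cho"},
    "2024-01-02": {"ann", "dee"},
    "2024-01-03": {"ann", "bob"},
}


def sum_of_daily_counts(daily):
    """Naive roll-up: add the daily unique-visitor counts together."""
    return sum(len(visitors) for visitors in daily.values())


def unique_visitors(daily):
    """Correct roll-up: count distinct visitors across the whole period."""
    return len(set().union(*daily.values()))


print(sum_of_daily_counts(daily_visitors))  # counts repeat visitors once per day
print(unique_visitors(daily_visitors))      # counts each visitor once
```

Here the naive sum is 7 while the number of distinct visitors is 4, because "ann" and "bob" appear on more than one day.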